Our current centralized logging solution is Logz.io, and most of our application logs are sent there from the k8s cluster. In addition, we use the logging system’s alert mechanism to trigger alerts and send them to various destinations, such as email and Slack channels. Most logging systems offer convenient ways to ship logs, including Filebeat/Metricbeat, agents, and APIs.

The problem

With several dozen Lambda functions, we need a generic way to ship their logs to the centralized logging system, one that minimizes the work and migration required from the developers who work with FaaS technology on a daily basis.

Possible solutions

- Code-wise solution: we can create a wrapper/library that any FaaS developer can use. This would require going over all the existing Lambdas and changing their code, redeploying, and testing them. Also, since our Lambdas use several runtimes, this approach is not language-agnostic: for each runtime we would need to write code that processes the logs and sends them to our centralized logging system.

- Use logging-system agents: some logging systems have their own agents, which take control of the process’s stdout/stderr and send it to the logging system. Here, however, we run into another problem: we become tightly coupled to our logging system, meaning our code is aware of the very specific logging system we chose. Whenever something changes (not necessarily the logging system itself; perhaps the vendor upgraded their agent or changed the way it works), we will need to iterate over all our Lambda functions again and update them.

- Use AWS CloudWatch triggers: each Lambda writes its logs to CloudWatch, and a trigger invokes a Lambda that collects them from there and forwards them to the logging system. At least here we are not tightly coupled to the logging system, but we pay a redundant cost, because the same logs are stored both in CloudWatch and in the logging system. Also, if we ever deploy a service that is not AWS-specific, it would be awkward to produce CloudWatch logs just so they can be forwarded to the logging system.

Final solution

For the final solution, we chose to separate our code from the specifics of the logging system. We set up a Kinesis Stream, a generic entry point that anyone with the AWS SDK and the appropriate authorization (AuthZ) can use to send logs in a specific format.

Each application/Lambda sends its logs to the Kinesis Stream (deployed once per AWS account per region). A Lambda triggered by the Kinesis Stream then sends the logs to the logging system.

This is how we avoid being tightly coupled to the logging system: only that consumer Lambda needs to be changed and redeployed. It also lets us switch to a fallback to CloudWatch Logs in case of a disaster without redeploying all the Lambdas.
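To make the consumer side more concrete, here is a minimal TypeScript sketch of how the Kinesis-triggered Lambda could decode its input. It assumes each Kinesis record carries a JSON array of `ILog` entries (the format described under “Logging general Apps”) and relies on Lambda delivering Kinesis record data base64-encoded. The vendor-specific call is left as a hypothetical `ship` function passed to the hypothetical `makeHandler`; this is an illustration, not the actual Logs Consumer code.

```typescript
// Minimal shape of the Kinesis event Lambda receives (only the fields used here).
interface KinesisEvent {
  Records: { kinesis: { data: string; partitionKey: string; sequenceNumber: string } }[];
}

// Log format the producers put on the stream (mirrors this article's ILog).
interface ILog {
  time: string;
  app_name?: string;
  app_type?: string;
  app_region?: string;
  message: string;
}

// Runtime check that a parsed value has the required ILog fields.
function isILog(value: unknown): value is ILog {
  const v = value as Partial<ILog> | null;
  return typeof v === "object" && v !== null && typeof v.time === "string" && typeof v.message === "string";
}

// Decode all records in the batch into a flat list of ILog entries.
function decodeLogs(event: KinesisEvent): ILog[] {
  const logs: ILog[] = [];
  for (const record of event.Records) {
    let parsed: unknown;
    try {
      // Lambda hands over Kinesis record data base64-encoded.
      parsed = JSON.parse(Buffer.from(record.kinesis.data, "base64").toString("utf8"));
    } catch {
      // Skip malformed records rather than failing (and retrying) the whole batch.
      continue;
    }
    const entries: unknown[] = Array.isArray(parsed) ? parsed : [parsed];
    for (const entry of entries) {
      if (isILog(entry)) logs.push(entry);
    }
  }
  return logs;
}

// Hypothetical factory: `ship` stands in for the logging-system-specific client.
function makeHandler(ship: (logs: ILog[]) => Promise<void>) {
  return async (event: KinesisEvent): Promise<void> => {
    const logs = decodeLogs(event);
    if (logs.length > 0) await ship(logs);
  };
}
```

Keeping the vendor call behind a single injected function mirrors the design above: changing the logging system means replacing that function in one Lambda, not touching the producers.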

How do we plug in the currently deployed Lambda functions?

We built a Lambda Extension and packaged it as a Lambda Layer. A layer is essentially an archive of files that is extracted into the filesystem (under /opt) of every Lambda that references the layer by ARN. A Lambda Extension can subscribe to the Lambda Logs API, an internal API of the Lambda execution environment that delivers the function’s stdout/stderr, and forward those logs to the Kinesis Stream.

In that sense, you don’t need to change your Lambdas; you only attach the specific Lambda Layer to the Lambda functions you want to log. We are also language-agnostic: the extension runs as a separate process and receives the logs over HTTP, so we maintain a single extension instead of writing one for every runtime used by our Lambda functions.
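As an illustration of that HTTP-based flow: an extension registers with the Extensions API (`POST /2020-01-01/extension/register` on the host in `AWS_LAMBDA_RUNTIME_API`), subscribes to the Logs API (`PUT /2020-08-15/logs`) with a body like the one below, and Lambda then POSTs batches of log entries to the extension’s local HTTP listener. This TypeScript sketch covers only the subscription body and the mapping of a batch to the article’s `ILog` format. The port, buffering values, `app_type` value, and the `toILogs` helper are assumptions, not the actual extension code; the function name and region are assumed to come from the standard `AWS_LAMBDA_FUNCTION_NAME` and `AWS_REGION` environment variables.

```typescript
// One entry of a batch that the Lambda Logs API POSTs to the extension's listener.
interface LogsApiEntry {
  time: string;    // ISO 8601 timestamp
  type: string;    // "function" for stdout/stderr; "platform.*" for platform events
  record: unknown; // a string for "function" entries, an object for most platform events
}

interface ILog {
  time: string;
  app_name?: string;
  app_type?: string;
  app_region?: string;
  message: string;
}

// Body for PUT http://${AWS_LAMBDA_RUNTIME_API}/2020-08-15/logs, sent with the
// Lambda-Extension-Identifier header returned at registration time.
// Port 8080 and the buffering values are assumptions for this sketch.
const subscriptionBody = {
  destination: { protocol: "HTTP", URI: "http://sandbox.localdomain:8080" },
  types: ["function"],
  buffering: { maxItems: 1000, maxBytes: 262144, timeoutMs: 1000 },
};

// Keep only the function's own output and tag it with the function's identity.
function toILogs(batch: LogsApiEntry[], env: Record<string, string | undefined>): ILog[] {
  return batch
    .filter((entry) => entry.type === "function")
    .map((entry) => ({
      time: entry.time,
      app_name: env.AWS_LAMBDA_FUNCTION_NAME,
      app_type: "lambda", // placeholder value
      app_region: env.AWS_REGION,
      message: String(entry.record).replace(/\s+$/, ""), // drop the trailing newline
    }));
}
```

Inside the extension, `toILogs(batch, process.env)` would run in the HTTP listener’s request handler, and the result would be forwarded to the Kinesis Stream.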

Moreover, the layer is shared across our AWS Organization, so all our accounts can use it: instead of deploying the Lambda Extension to each account, we deploy it once per region and reference its ARN from any account in the organization.

Container-based Lambda functions cannot use Lambda Layers by design. For them to work, we need to edit the Dockerfile the Lambda image is built from, adding a few lines that copy the extension into the container.

See the “Logging container-based Lambdas” section of this article for an example.

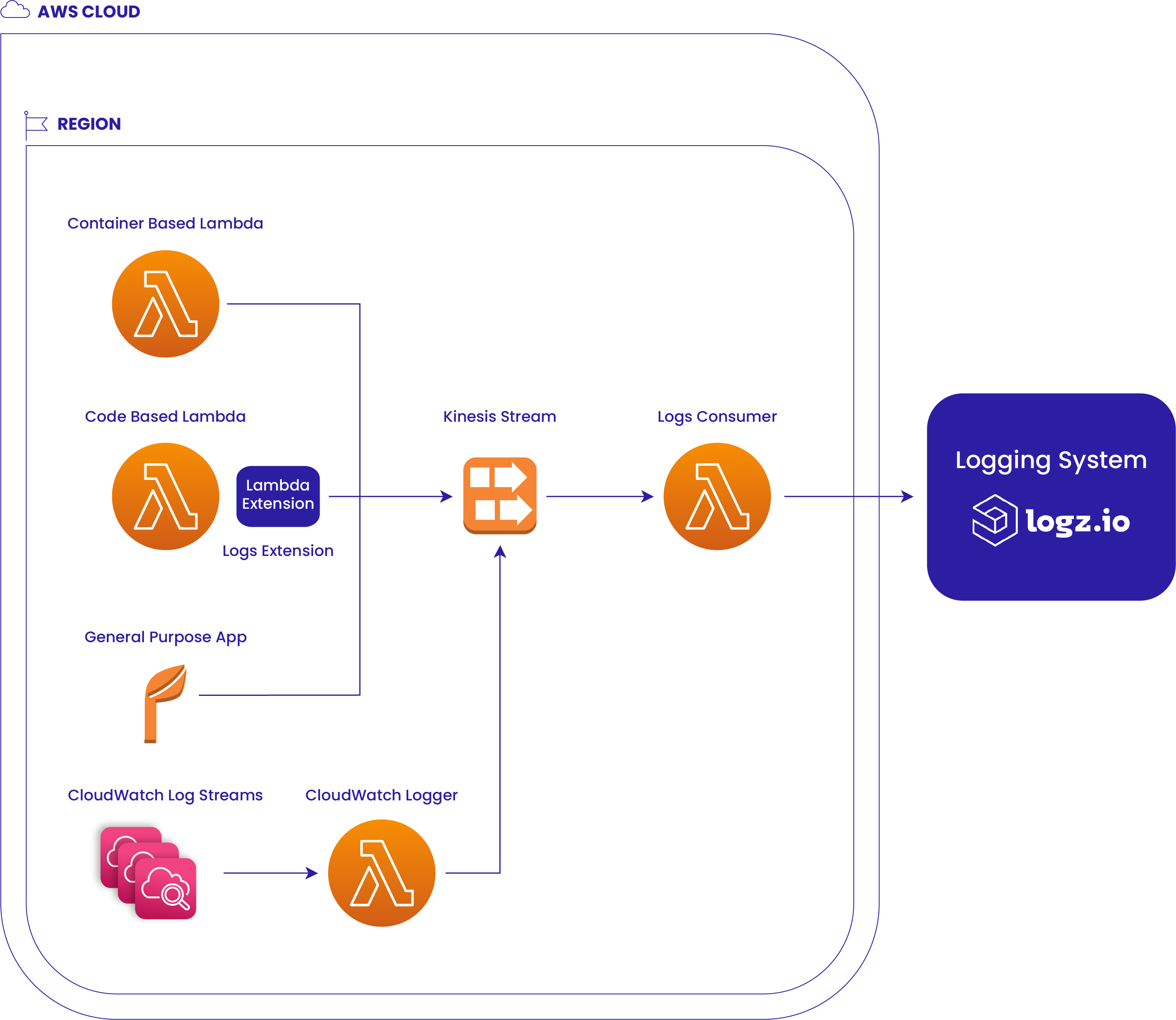

HLD: Diagram

Explanation

Every kind of app can be integrated to send logs to the Kinesis Stream. A container-based Lambda reuses a dedicated image that contains the same files as the Lambda Extension, which sends logs to the Kinesis Stream.

A code-based Lambda attaches the Lambda Layer, which sends logs to the Kinesis Stream, and a generic app can use the AWS SDK to send logs to the Kinesis Stream directly.

The generic code that sends logs to the Kinesis Stream should be extracted into an Optibus library that does one thing: send the logs to the appropriate stream. Currently, this code is embedded inside the Lambda Extension.
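One core piece of such a library would be batching: Kinesis limits the size of each record’s data blob, so logs have to be packed into JSON arrays that stay under that limit. A minimal TypeScript sketch with a hypothetical `chunkLogs` helper, assuming the long-standing 1 MiB per-record limit as the default:

```typescript
interface ILog {
  time: string;
  app_name?: string;
  app_type?: string;
  app_region?: string;
  message: string;
}

// Assumed per-record limit: Kinesis's long-standing 1 MiB cap on a record's data blob.
const MAX_RECORD_BYTES = 1024 * 1024;

// Pack logs into JSON-array payloads whose UTF-8 size never exceeds maxBytes,
// so that each payload can be sent as one Kinesis record.
function chunkLogs(logs: ILog[], maxBytes: number = MAX_RECORD_BYTES): string[] {
  const payloads: string[] = [];
  let current: string[] = [];
  let currentBytes = 2; // the enclosing "[" and "]"

  for (const log of logs) {
    const item = JSON.stringify(log);
    const itemBytes = Buffer.byteLength(item, "utf8");
    if (itemBytes + 2 > maxBytes) {
      throw new Error("a single log entry exceeds the per-record size limit");
    }
    // A comma separates this item from the previous one, if any.
    const separator = current.length > 0 ? 1 : 0;
    if (currentBytes + separator + itemBytes > maxBytes) {
      payloads.push(`[${current.join(",")}]`);
      current = [];
      currentBytes = 2;
    }
    currentBytes += (current.length > 0 ? 1 : 0) + itemBytes;
    current.push(item);
  }

  if (current.length > 0) payloads.push(`[${current.join(",")}]`);
  return payloads;
}
```

Each payload would then become the `Data` of one record. A PutRecords request also has its own limits (500 records and 5 MiB per request), which such a library would need to respect as well.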

Usage

Deployment to a new account/region

Deployment of Logs Consumer

This is done once per account per region. If the solution (Kinesis Stream + Logs Consumer) is not yet deployed in your region, you will need to:

- Make sure you have a KMS key named infra-key.

- Create a secret for the consumer Lambda in AWS Secrets Manager, since the logging system’s API key must be stored in a safe place. The secret MUST be tagged with “secret_app”: “logzio”, because the Logs Consumer code searches for the secret by tag filtering (instead of by a specific ARN). The secret value should be:

  ```json
  {
    "token": "<LOGZIO_API_KEY>"
  }
  ```

- Clone the armada git repository: `git clone git@github.com:Optibus/armada.git`

- cd into the apps/logs-consumer folder: `cd armada/apps/logs-consumer`

- Install dependencies: `npm ci`

- Log into the CORRECT AWS ACCOUNT AND REGION:

  ```shell
  saml2aws login -a <AWS_PROFILE_NAME> --session-duration=<SESSION_DURATION_IN_SECONDS>
  export AWS_PROFILE=<AWS_PROFILE_NAME>
  export AWS_REGION=<AWS_REGION_NAME>
  ```

- Run the deployment script of the Logs Consumer solution: `npm run deploy`
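The tag-based secret lookup mentioned in the secret step could look roughly like this TypeScript sketch. It assumes the Logs Consumer lists secrets (for example with the Secrets Manager `ListSecrets` operation, whose entries include their `Tags`) and picks the one tagged `secret_app: logzio`. `findSecretArnByTag` and `parseToken` are hypothetical helpers, not the consumer’s actual code.

```typescript
// Minimal shapes of Secrets Manager ListSecrets output (only the fields used here).
interface Tag { Key?: string; Value?: string }
interface SecretListEntry { ARN?: string; Name?: string; Tags?: Tag[] }

// Return the ARN of the single secret carrying the key/value tag pair.
// Failing loudly on zero or several matches is a choice made for this sketch.
function findSecretArnByTag(secrets: SecretListEntry[], key = "secret_app", value = "logzio"): string {
  const matches = secrets.filter((s) => (s.Tags ?? []).some((t) => t.Key === key && t.Value === value));
  const arn = matches[0]?.ARN;
  if (matches.length !== 1 || !arn) {
    throw new Error(`expected exactly one secret tagged ${key}=${value}, found ${matches.length}`);
  }
  return arn;
}

// Parse the secret value created above: { "token": "<LOGZIO_API_KEY>" }.
function parseToken(secretString: string): string {
  const parsed = JSON.parse(secretString) as { token?: unknown };
  if (typeof parsed.token !== "string" || parsed.token.length === 0) {
    throw new Error("secret is missing a non-empty string 'token' field");
  }
  return parsed.token;
}
```

`ListSecrets` also accepts `tag-key` and `tag-value` filters, but those match independently of each other, so checking the exact key/value pair on the client side, as above, is still a reasonable safeguard.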

Deployment of CloudWatch Logger Lambda

This is done once per account per region. If the solution (CloudWatch Logger) is not yet deployed in your region, you will need to:

- Clone the armada git repository: `git clone git@github.com:Optibus/armada.git`

- cd into the apps/CloudWatch-logger folder: `cd armada/apps/CloudWatch-logger`

- Install dependencies: `npm ci`

- Log into the CORRECT AWS ACCOUNT AND REGION:

  ```shell
  saml2aws login -a <AWS_PROFILE_NAME> --session-duration=<SESSION_DURATION_IN_SECONDS>
  export AWS_PROFILE=<AWS_PROFILE_NAME>
  export AWS_REGION=<AWS_REGION_NAME>
  ```

- Run the deployment script of the CloudWatch Logger solution: `npm run deploy`

Deployment of Logs Extension

Logs Extension Layer

The Logs Extension Lambda Layer needs to be deployed only once per organization per region, because it is a shareable resource. All the AWS accounts under the organization can attach it to their Lambdas, but each Lambda must use a layer that is located in its own region.

Steps:

- Clone the armada git repository: `git clone git@github.com:Optibus/armada.git`

- cd into the libs/logs-extension folder: `cd armada/libs/logs-extension`

- Install dependencies: `npm ci`

- Log into the CORRECT AWS ACCOUNT AND REGION:

  ```shell
  saml2aws login -a <AWS_PROFILE_NAME> --session-duration=<SESSION_DURATION_IN_SECONDS>
  export AWS_PROFILE=<AWS_PROFILE_NAME>
  export AWS_REGION=<AWS_REGION_NAME>
  ```

- Run the deployment script of the Lambda Extension Layer: `npm run deploy`

Logs Extension Image

The Logs Extension Image is a Docker image used when building container-based Lambda images. We need it because it contains the extension files: our Lambda’s Dockerfile imports this image and copies its files, and from then on the logs are sent to the Kinesis Stream automatically.

Steps:

- Clone the armada git repository: `git clone git@github.com:Optibus/armada.git`

- cd into the libs/logs-extension folder: `cd armada/libs/logs-extension`

- Install dependencies: `npm ci`

- Log into the CORRECT AWS ACCOUNT AND REGION:

  ```shell
  saml2aws login -a <AWS_PROFILE_NAME> --session-duration=<SESSION_DURATION_IN_SECONDS>
  export AWS_PROFILE=<AWS_PROFILE_NAME>
  export AWS_REGION=<AWS_REGION_NAME>
  ```

- Log in to the CORRECT ECR: `ecr_login <AWS_REGION_NAME>`

- Run the deployment script of the Logs Extension Image: `npm run deploy-image -- <AWS_ACCOUNT_ID> <AWS_REGION> <IMAGE_TAG>`

Logging code-based Lambdas

Once you have verified that the solution is deployed, you can start using it:

- Add the logs-stream-put policy (one per region) to your Lambda’s role. This policy allows your Lambda to use the PutRecord and PutRecords actions to send logs to the Kinesis Stream.

- Attach the Lambda Layer to the Lambda function.
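For reference, the policy from the first step only needs to grant the two Kinesis write actions. The TypeScript sketch below builds such an IAM policy document. The article does not show the actual contents of `logs-stream-put`, so the hypothetical `logsStreamPutPolicy` helper, the stream name parameter, and the resource scoping are assumptions; only the per-region name follows the `logs-stream-put-<region>` pattern used in this section’s CDK snippet.

```typescript
interface PolicyDocument {
  Version: string;
  Statement: { Effect: "Allow" | "Deny"; Action: string[]; Resource: string }[];
}

// Hypothetical helper: streamName is a placeholder, since the real stream name is not given here.
function logsStreamPutPolicy(
  region: string,
  accountId: string,
  streamName: string
): { name: string; document: PolicyDocument } {
  return {
    name: `logs-stream-put-${region}`,
    document: {
      Version: "2012-10-17",
      Statement: [
        {
          Effect: "Allow",
          // Write-only access: producers can put records but cannot read the stream.
          Action: ["kinesis:PutRecord", "kinesis:PutRecords"],
          Resource: `arn:aws:kinesis:${region}:${accountId}:stream/${streamName}`,
        },
      ],
    },
  };
}
```

Scoping the resource to a single stream in a single region is one reason a per-region policy name is convenient.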

Via Console

Go to the Lambda service → click the target Lambda you want to send logs from → Configuration → Permissions → click the Lambda role → Attach Permissions… → choose the logs-stream-put policy for the correct region from the list (it should show up if the solution was deployed correctly) and save.

After completing these steps, go back to your Lambda function, scroll down to the Layers section, click Add Layer, and attach the layer by its ARN (make sure you use the latest available version of the Lambda Layer, and that it is located in the same region!). Starting with the next invocation, you should see logs in the logging system account that matches the API key stored in Secrets Manager (prod/dev). An example layer ARN:

`arn:aws:lambda:us-east-2:account_id:layer:logs-extension:1`
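Because a Lambda can only use a layer from its own region, a deployment script could check the region embedded in the layer ARN before attaching it. A small TypeScript sketch with hypothetical helper names, assuming the layer-version ARN format `arn:aws:lambda:<region>:<account_id>:layer:<name>:<version>` shown above:

```typescript
interface LayerVersionArn {
  partition: string;
  region: string;
  accountId: string;
  layerName: string;
  version: number;
}

// Parse arn:<partition>:lambda:<region>:<account_id>:layer:<name>:<version>.
function parseLayerVersionArn(arn: string): LayerVersionArn {
  const parts = arn.split(":");
  if (parts.length !== 8 || parts[0] !== "arn" || parts[2] !== "lambda" || parts[5] !== "layer") {
    throw new Error(`not a Lambda layer version ARN: ${arn}`);
  }
  // Layer versions are positive integers.
  if (!/^[1-9]\d*$/.test(parts[7])) {
    throw new Error(`invalid layer version in ARN: ${arn}`);
  }
  return {
    partition: parts[1],
    region: parts[3],
    accountId: parts[4],
    layerName: parts[6],
    version: Number(parts[7]),
  };
}

// Fail early if the layer lives in a different region than the function.
function assertSameRegion(layerArn: string, functionRegion: string): void {
  const { region } = parseLayerVersionArn(layerArn);
  if (region !== functionRegion) {
    throw new Error(`layer is in ${region}, but the function runs in ${functionRegion}`);
  }
}
```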

Via AWS CDK

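Add the policy to your Lambda using this code snippet (assuming CDK v2, i.e. `aws-cdk-lib`):

```typescript
import * as lambda from "aws-cdk-lib/aws-lambda";
import { ManagedPolicy } from "aws-cdk-lib/aws-iam";

// CDK stuff... (inside your Stack's constructor)

// Your target Lambda function declaration using CDK objects
const handler = new lambda.Function(this, "YourFunction", {
  // ... your existing function props (runtime, handler, code, ...)
});

// Add the managed policy that allows your Lambda to send logs to Kinesis
handler.role?.addManagedPolicy(
  ManagedPolicy.fromManagedPolicyName(
    this,
    `logs-stream-put-${process.env.CDK_DEFAULT_REGION}`,
    `logs-stream-put-${process.env.CDK_DEFAULT_REGION}`
  )
);
```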

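Attach the layer using the CDK (the `layers` list belongs inside the function props):

```typescript
import * as lambda from "aws-cdk-lib/aws-lambda";

// CDK stuff... (inside your Stack's constructor)

// Your target Lambda function declaration using CDK objects
const handler = new lambda.Function(this, "YourFunction", {
  // ... your existing function props (runtime, handler, code, ...)
  layers: [
    lambda.LayerVersion.fromLayerVersionArn(
      this,
      "logs-extension-layer",
      "arn:aws:lambda:us-east-2:account_id:layer:logs-extension:1"
    ),
  ],
});
```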

Logging container-based Lambdas

First, attach the logs-stream-put policy using either the console or the CDK, as shown in the “Logging code-based Lambdas” section.

After that, edit the Dockerfile of the image your Lambda uses, as in this example:

```
+ ARG LOGS_EXT_REGISTRY
+ ARG LOGS_EXT_TAG
+ FROM --platform=linux/amd64 $LOGS_EXT_REGISTRY/logs-extension:${LOGS_EXT_TAG} AS layer

FROM --platform=linux/amd64 public.ecr.aws/lambda/nodejs:14

+ WORKDIR /opt
+ COPY --from=layer /opt/extensions ./extensions
+ COPY --from=layer /opt/logs-extension ./logs-extension

# Function code
WORKDIR /var/task
COPY app.js .
CMD ["app.handler"]
```

Add all the lines that begin with the “+” sign (without the “+” itself) to your original Dockerfile. The Dockerfile uses two build args: LOGS_EXT_REGISTRY, which points to the registry where the Logs Extension Image is located, and LOGS_EXT_TAG, which selects the image version in case we update the extension and increment the version number. This means you must pass these build args when you build your function’s Docker image, both in CI and locally:

```shell
docker build \
  --build-arg LOGS_EXT_REGISTRY=746419327481.dkr.ecr.us-east-2.amazonaws.com \
  --build-arg LOGS_EXT_TAG=latest \
  ...
```

Because the extension files are copied from the extension image into your image, Lambda initializes the extension on startup, and the extension collects your logs and sends them to the Kinesis Stream without requiring you to touch your code.

Logging general Apps

Use the AWS SDK to call the Kinesis Stream in your region and send it the logs as an array of objects that follow this interface:

```typescript
interface ILog {
  time: string;
  app_name?: string;
  app_type?: string;
  app_region?: string;
  message: string;
}
```
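A minimal TypeScript sketch of the producer side for a general app: it builds `ILog` entries and a request object with the same shape as the AWS SDK v3 `PutRecordsCommandInput` (`StreamName`, plus `Records` with `Data` and `PartitionKey`). The stream name, the `app_type` value, the hypothetical `buildLog`/`buildPutRecordsInput` helpers, and the choice of one JSON array per record with the app name as partition key are all assumptions. Record sizes are not checked here.

```typescript
interface ILog {
  time: string;
  app_name?: string;
  app_type?: string;
  app_region?: string;
  message: string;
}

// Same shape as a subset of PutRecordsCommandInput in @aws-sdk/client-kinesis.
interface PutRecordsInput {
  StreamName: string;
  Records: { Data: Uint8Array; PartitionKey: string }[];
}

// Kinesis accepts at most 500 records per PutRecords call.
const MAX_RECORDS_PER_REQUEST = 500;

// Build one ILog entry; "app" as app_type is a placeholder value.
function buildLog(message: string, appName: string, region: string): ILog {
  return { time: new Date().toISOString(), app_name: appName, app_type: "app", app_region: region, message };
}

// Pack logs into records of up to `logsPerRecord` entries, each record holding
// a JSON array of ILog; the app name is used as the partition key.
function buildPutRecordsInput(
  streamName: string,
  logs: ILog[],
  appName: string,
  logsPerRecord = 100
): PutRecordsInput {
  if (logsPerRecord < 1) throw new Error("logsPerRecord must be at least 1");
  const records: PutRecordsInput["Records"] = [];
  for (let i = 0; i < logs.length; i += logsPerRecord) {
    const batch = logs.slice(i, i + logsPerRecord);
    records.push({ Data: Buffer.from(JSON.stringify(batch), "utf8"), PartitionKey: appName });
  }
  if (records.length > MAX_RECORDS_PER_REQUEST) {
    throw new Error(`too many records (${records.length}); split into several PutRecords calls`);
  }
  return { StreamName: streamName, Records: records };
}
```

The returned object can then be sent with `client.send(new PutRecordsCommand(input))` from `@aws-sdk/client-kinesis`. PutRecords is not all-or-nothing, so a non-zero `FailedRecordCount` in the response means some records should be retried.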

Logging via CloudWatch log streams

If you have Lambda functions/applications that don’t support Lambda extensions, and you don’t want to change the application’s code to send logs to the Kinesis Stream, you can use the CloudWatch Logger Lambda, which subscribes to CloudWatch log groups and automatically pushes their logs to the Kinesis Stream.
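Under the hood, a Lambda subscription filter invokes the target function with a base64-encoded, gzip-compressed JSON payload. The following TypeScript sketch shows how the CloudWatch Logger could turn that payload into the `ILog` format before pushing it to the Kinesis Stream. Using the log group as `app_name`, the `app_type` value, and the `decodeSubscriptionEvent` name are assumptions, not the actual CloudWatch Logger code.

```typescript
import * as zlib from "zlib";

interface ILog {
  time: string;
  app_name?: string;
  app_type?: string;
  app_region?: string;
  message: string;
}

// Decoded CloudWatch Logs subscription payload (only the fields used here).
interface CloudWatchLogsData {
  messageType: string; // "DATA_MESSAGE" or "CONTROL_MESSAGE"
  logGroup: string;
  logStream: string;
  logEvents: { id: string; timestamp: number; message: string }[];
}

// A subscription filter invokes Lambda with { awslogs: { data } }, where data is
// base64-encoded, gzip-compressed JSON.
function decodeSubscriptionEvent(event: { awslogs: { data: string } }, region: string): ILog[] {
  const json = zlib.gunzipSync(Buffer.from(event.awslogs.data, "base64")).toString("utf8");
  const payload = JSON.parse(json) as CloudWatchLogsData;
  // Control messages only check that the destination is reachable; they carry no logs.
  if (payload.messageType !== "DATA_MESSAGE") return [];
  return payload.logEvents.map((e) => ({
    time: new Date(e.timestamp).toISOString(),
    app_name: payload.logGroup, // assumption: the log group identifies the app
    app_type: "cloudwatch",     // placeholder value
    app_region: region,
    message: e.message,
  }));
}
```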

To attach a log group to the CloudWatch Logger Lambda:

- Open the desired log group in the CloudWatch service (for a Lambda, open the Lambda, click Monitor, then View logs in CloudWatch)

- Click the Subscription filters tab

- Click Create → Create Lambda subscription filter

- Choose the Lambda function to invoke when new log events are pushed to the log group (the CloudWatch Logger)

- Enter a generic name in the Subscription filter name field

- Press Start streaming

That’s it - from now on, the current Log Group will be streaming logs towards the Kinesis Stream!